- What ElectroNeek web scraping tool can do: 100% accurate screen scraper for Java, WPF, HTML, PDF, Flash, and more. 97% accurate Screen OCR tool. Precise GUI automation at the level of objects for imitating mouse and data entry process. Fast scraping with a typical duration of less than a second.

- Script website interactions. A runtime, just like Node. Nightmare.js: a high-level browser library, https://github.com/segmentio.

Making it non-coder friendly

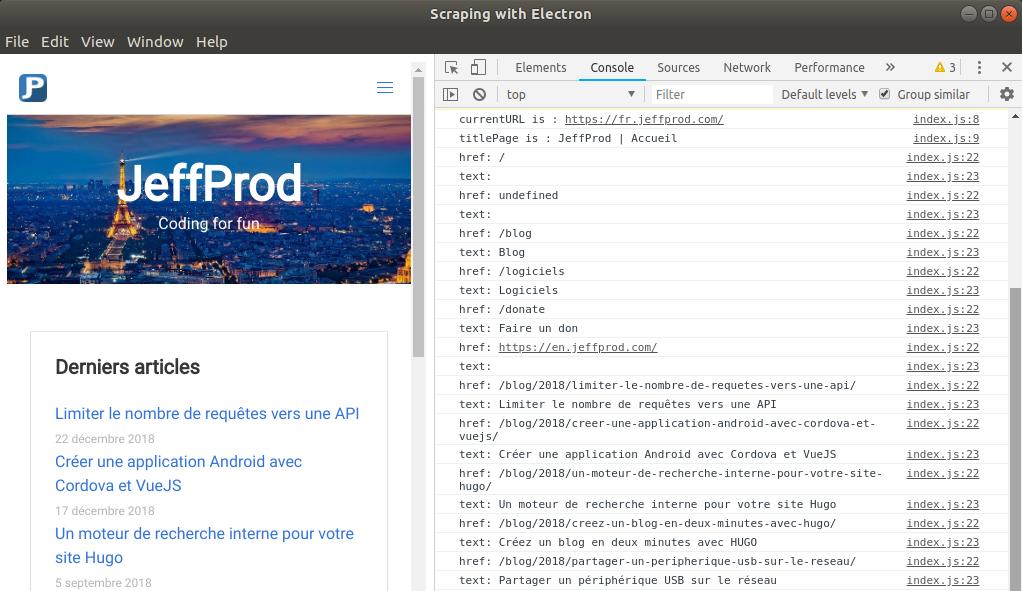

Electron is a framework for creating native Windows/Mac/Linux applications with web technologies (JavaScript, HTML, CSS). It includes the Chromium browser, fully configurable. Is there a better way to code a portable application with a graphical user interface to scrape a given site?

The goal of this project was to turn the link checker into something that anyone could easily use. Electronjs is a really easy way to do this.

Electronjs is getting popular enough that you can search for almost any boilerplate you want to use and it will be available. I am very comfortable with Angular, so I ended up using Maxime Gris’ package, and it was super well set up.

Code changes

I really didn’t have to do many code changes to get this running. The main one was to copy index.ts into an index-local.ts file that is run with npm start from the link checker script.

I turned index.ts into a function that accepts the parameters, declared as export async function deadLinkChecker(desiredDomain: string, desiredIOThreads: any, links: any[]). This way I can import the deadLinkChecker function into my electron app after it’s installed as an npm dependency.

Honestly, besides that, the rest of the code changes are covered in the not insignificant number of problems listed below.

Problems; I got ’em

I would say at this point this project isn’t finished yet. Over the next week I’m going to work on ironing out the few (but pretty big) problems that still exist.

maxSockets ignored on electron?

The first (and probably biggest) problem is that the maxSockets number appears to be ignored when the script runs from Electron. I’ve logged out the request agent and it has the correct number listed in maxSockets, but the run time doesn’t match what I would expect.

For example, if I set maxSockets to 1, the script takes ~35 seconds to complete when I run it manually. If I run it through Electron, it takes between 17 and 21 seconds. This is the same time it takes when I run it with 4 maxSockets, manually or from Electron. In Electron, the job takes the same amount of time regardless of the maxSockets I set.

This is a problem because if the setting is being ignored, the app is hitting the site as fast as it can, which really could act like a DDoS attack. This app is not meant to be a weapon.
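One way to make the cap independent of how Electron treats the agent is to limit concurrency inside the script itself. The sketch below is not the link checker's actual code: runWithLimit is a hypothetical helper, and the worker passed to it stands in for whatever function checks a single link.

```javascript
// Hypothetical helper: run `worker` over `items` with at most `limit`
// calls in flight, regardless of any agent-level socket settings.
async function runWithLimit(items, limit, worker) {
  const results = new Array(items.length);
  const laneCount = Math.max(1, Math.min(Number(limit) || 1, items.length));
  let next = 0;

  // Each lane claims the next unprocessed index until none are left.
  async function lane() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}
```

With this in place, a limit of 1 should behave strictly sequentially, which makes it easier to tell whether the timing difference comes from concurrency or from something else.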

Number of maxSockets being cached

In the electron app I have a field where a user can set the number of I/O threads that they want the app to use. The idea is that the more threads used the faster the scraper will go. If I set two threads, it should (see problem above with the number of sockets being ignored) make two requests concurrently and then continue as those two complete.

If I run it a second time and set it to four, my logs show that four is being received but the request agent maxSockets still shows that 2 are being used. I believe this has to do with the fact that the script is still living because the electron app is still living. I’ve tried a few things, like globalAgent.destroy() without any luck. If I do a reload on the app, it will reset and use the (first) number passed in correctly.
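One thing to try is building a fresh agent on every run instead of relying on a shared or global one. This is a sketch under assumptions: createAgents and destroyAgents are hypothetical names, and it presumes the request library in use accepts a custom agent option. It may not explain the caching, but it removes shared state between runs.

```javascript
const http = require('http');
const https = require('https');

// Hypothetical helper: build new agents for each run so a changed thread
// count never reuses settings or sockets from an earlier run.
function createAgents(desiredIOThreads) {
  const maxSockets = Math.max(1, Number(desiredIOThreads) || 1);
  return {
    http: new http.Agent({ keepAlive: false, maxSockets }),
    https: new https.Agent({ keepAlive: false, maxSockets }),
  };
}

// Close any sockets the agents still hold once a run has finished.
function destroyAgents(agents) {
  agents.http.destroy();
  agents.https.destroy();
}
```

Each call to the checker would create its own pair, pass the matching agent to every request, and destroy the pair when the run completes.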

Difference in request time in electron vs running it manually

When I built this script, I was setting 10 seconds as my request timeout ceiling. This didn’t seem to be a problem and made sense to me. If a request took longer than 10 seconds to complete, that was a lot longer than any reasonable website should take to complete.

Using this same timeout on the electron app gave a bunch of 999 status (statuses?). If I bumped the timeout to 100 seconds the requests would resolve but it would take a LOT longer. So…that means that the requests are literally taking longer. Why would it be any different between the electron app and running it manually?
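For reference, a timeout ceiling of this kind can be sketched as a race between the request and a timer. The names here are hypothetical, and treating 999 as the checker's placeholder for a request that never produced a real HTTP status is an assumption based on the behavior described above.

```javascript
// Hypothetical wrapper: resolve with a placeholder 999 status if `promise`
// has not settled within `ms` milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((resolve) => {
    timer = setTimeout(() => resolve({ status: 999, timedOut: true }), ms);
  });
  // Clear the timer either way so it cannot keep the process alive.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}
```

Note that this only stops waiting for the request; it does not cancel the underlying socket, so slow requests can still occupy a connection.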

Should I allow adjustment of maxSockets?

As I write this article, I’m trying to decide if I should even have a field for maxSockets that the user can adjust. If I’m trying to limit it so that a user can’t DDoS a site, then I don’t think this is the way to do it. A non-engineer using this would probably not understand the danger, would just want more speed, and would try to set it as high as possible.

This brings up the point of whether I should try to limit it at all. Should I just let it run as fast as possible and not have the field available? It would certainly fix my above two problems. This could potentially be dangerous but I believe there are other tools that do similar things.

I think in the end, I will settle on removing the field option and just set the maxSockets to always be four. Four shouldn’t be enough to DDoS any normal site. If someone really wanted to DDoS a site, there are better tools than this running at four maxSockets. This solves my above caching problem but I’m still curious why it’s happening and so I will probably dig further into that still.

How do I stop the checker?

I really am not sure. To be continued. I’ll do some more research over the next week and see what I can do to stop it. I’ll probably need to set up an API in the link checker and then maybe leverage web workers.
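One possible approach, different from the API and web worker idea above, is a cancellation signal that the checking loop consults between links. This is only a sketch: checkLinks and checkOne are hypothetical names, and it relies on AbortController being available as a global, which is the case in recent Node.js versions and in browsers.

```javascript
// Hypothetical cancellable loop: checks links one by one and stops as soon
// as the caller aborts the signal.
async function checkLinks(links, checkOne, signal) {
  const results = [];
  for (const link of links) {
    if (signal.aborted) break; // stop requested: skip the remaining links
    results.push(await checkOne(link));
  }
  return { results, stopped: signal.aborted };
}
```

The app would create a controller with new AbortController(), pass controller.signal into the loop, and call controller.abort() from a stop button. Requests already in flight still finish; only the remaining links are skipped.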

Web scraping is the easiest way to automate the process of extracting data from any website. Puppeteer scrapers can be used when a normal request module based scraper is unable to extract data from a website.

What is Puppeteer?

Puppeteer is a node.js library that provides a powerful but simple API that allows you to control Google’s Chrome or Chromium browser. It also allows you to run Chromium in headless mode (useful for running browsers in servers) and can send and receive requests without the need for a user interface. It works in the background, performing actions as instructed by the API. The developer community for puppeteer is very active and new updates are rolled out regularly. With its full-fledged API, it covers most actions that can be done with a Chrome browser. As of now, it is one of the best options to scrape JavaScript-heavy websites.

What can you do with Puppeteer?

Puppeteer can do almost everything Google Chrome or Chromium can do.

- Click elements such as buttons, links, and images.

- Type like a user in input boxes and automate form submissions

- Navigate pages, click on links, and follow them, go back and forward.

- Take a timeline trace to find out where the issues are in a website.

- Carry out automated testing for user interfaces and various front-end apps, directly in a browser.

- Take screenshots and convert web pages to PDFs.

Web Scraping using Puppeteer

In this tutorial, we’ll show you how to create a web scraper for Booking.com to scrape the details of hotel listings in a particular city from the first page of results. We will scrape the hotel name, rating, number of reviews, and price for each hotel listing.

Required Tools

To install Puppeteer, you first need to install Node.js. The code that controls the browser (the scraper) is written in JavaScript: Node.js runs the script and lets you control the Chrome browser using the Puppeteer library. Puppeteer requires Node v7.6.0 or greater, but for this tutorial we will go with Node v9.0.0.

Installing Node.js

Linux

You can head over to Nodesource and choose the distribution you want. Here are the steps to install Node.js on Ubuntu 16.04:

1. Open a terminal and run sudo apt install curl in case curl is not installed.

2. Then run curl -sL https://deb.nodesource.com/setup_8.x | sudo -E bash -

3. Once that’s done, install Node.js by running sudo apt install nodejs. This will automatically install npm.

Windows and Mac

To install Node.js on Windows or Mac, download the package for your OS from the Node.js website: https://nodejs.org/en/download/

Obtaining the URL

Let’s start by obtaining the booking URL. Go to booking.com and search for a city with the inputs for check-in and check-out dates. Click the search button and copy the URL that has been generated. This will be your booking URL.

The gif below shows how to obtain the booking URL for hotels available in Singapore.

After you have completed the installation of Node.js, we will install the project requirements, which will also download the Puppeteer library used in the scraper. Download both files, app.js and package.json, from below and place them inside a folder. We have named our folder booking_scraper.

The script below is the scraper. We have named it app.js. This script will scrape the results for a single listing page:
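The original app.js is not reproduced in this copy of the tutorial, so what follows is only a rough sketch of what such a scraper could look like, not the tutorial's script. The CSS selectors are hypothetical placeholders (Booking.com's markup changes often and has to be inspected by hand), and scrapeListings and clean are illustrative names.

```javascript
// Hypothetical selectors: replace them after inspecting the live page.
const SELECTORS = {
  card: '.hotel-card',
  name: '.hotel-name',
  rating: '.hotel-rating',
  reviews: '.hotel-review-count',
  price: '.hotel-price',
};

// Collapse whitespace; return null when a field is missing or empty.
function clean(text) {
  if (text === null || text === undefined) return null;
  const value = String(text).replace(/\s+/g, ' ').trim();
  return value === '' ? null : value;
}

// Scrape the first results page. `browser` is a Puppeteer Browser instance.
async function scrapeListings(browser, bookingUrl) {
  const page = await browser.newPage();
  try {
    await page.goto(bookingUrl, { waitUntil: 'networkidle2' });
    // This callback runs inside the page and returns raw text per hotel card.
    const rows = await page.$$eval(
      SELECTORS.card,
      (cards, sel) =>
        cards.map((card) => {
          const read = (s) => {
            const el = card.querySelector(s);
            return el ? el.textContent : null;
          };
          return {
            name: read(sel.name),
            rating: read(sel.rating),
            reviews: read(sel.reviews),
            price: read(sel.price),
          };
        }),
      SELECTORS
    );
    return rows.map((row) => ({
      name: clean(row.name),
      rating: clean(row.rating),
      reviews: clean(row.reviews),
      price: clean(row.price),
    }));
  } finally {
    await page.close();
  }
}
```

To use it, the script would load the library with require('puppeteer'), open a browser with puppeteer.launch({ headless: true }), pass that browser and the booking URL to scrapeListings, print the returned array, and then call browser.close().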

The script below is package.json which contains the libraries needed to run the scraper

Installing the project dependencies, which will also install Puppeteer.

- Go to the project directory and make sure it has the package.json file inside it.

- Use npm install to install the dependencies. This will also install Puppeteer and download the Chromium browser to run the Puppeteer code. By default, Puppeteer works with the Chromium browser, but you can also use Chrome.

Now copy the URL that was generated from booking.com and paste it into the bookingUrl variable in the provided space (line 3 in app.js). Make sure the URL is inside quotes; otherwise, the script will not work.

Running the Puppeteer Scraper

To run a Node.js program, you need to type node followed by the file name:

node filename.js

For this script, it will be:

node app.js

Turning off Headless Mode

The script above runs the browser in headless mode. To turn headless mode off, change this line:

const browser = await puppeteer.launch({ headless: true });

to:

const browser = await puppeteer.launch({ headless: false });

You should then be able to see what is going on.

The program will run, fetch all the hotel details, and display them in the terminal. If you want to scrape another page, you can change the URL in the bookingUrl variable and run the program again.

Here is what the output for hotels in Singapore will look like:

Debug Using Screenshots

In case you are stuck, you can always take a screenshot of the webpage to see whether you are being blocked or whether the structure of the website has changed.
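A minimal sketch of such a debugging helper is shown here. The function name and the default file name are illustrative assumptions; page is an already open Puppeteer page.

```javascript
// Save a full-page screenshot of an open Puppeteer page for debugging.
// `filePath` defaults to a hypothetical file name in the working directory.
async function saveDebugScreenshot(page, filePath = 'debug.png') {
  await page.screenshot({ path: filePath, fullPage: true });
  return filePath;
}
```

Calling it right after page.goto, before any data extraction, shows what the browser actually received, for example a CAPTCHA or a consent dialog instead of the listings.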

Speed Up Puppeteer Web Scraping

Loading every image and stylesheet on a page slows down scraping. Disabling CSS and images can speed up browsing and data scraping while also reducing bandwidth consumption.
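In Puppeteer this is usually done with request interception. The sketch below assumes an already open page; blockHeavyResources and BLOCKED_TYPES are illustrative names, while setRequestInterception, resourceType, abort, and continue are part of the Puppeteer API.

```javascript
// Resource types to skip; 'image' and 'stylesheet' cover images and CSS.
const BLOCKED_TYPES = new Set(['image', 'stylesheet']);

// Abort requests for blocked resource types and let everything else through.
async function blockHeavyResources(page, blocked = BLOCKED_TYPES) {
  await page.setRequestInterception(true);
  page.on('request', (request) => {
    if (blocked.has(request.resourceType())) {
      request.abort();
    } else {
      request.continue();
    }
  });
}
```

Call it once after browser.newPage() and before page.goto so that the very first navigation is already filtered. Some sites render incorrectly without CSS, so it is worth confirming that the selectors still match.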

Known Limitations

When using Puppeteer you should keep some things in mind. Since Puppeteer opens up a browser, it takes a lot more memory and CPU to run than request-based approaches that fetch and parse HTML without a browser.

If you want to scrape a simple website that does not use a JavaScript-heavy frontend, a simple request-based scraper (for example, in Python) is enough. There are also plenty of open source JavaScript web scraping tools you can try, such as Apify SDK, Nodecrawler, Playwright, and more.

You may find Puppeteer a bit slow, since each page has to load and render in a real browser before scraping can start. The official Puppeteer library targets Node.js, so scripts are written in JavaScript or TypeScript.

Disclaimer:

Any code provided in our tutorials is for illustration and learning purposes only. We are not responsible for how it is used and assume no liability for any detrimental usage of the source code. The mere presence of this code on our site does not imply that we encourage scraping or scrape the websites referenced in the code and accompanying tutorial. The tutorials only help illustrate the technique of programming web scrapers for popular internet websites. We are not obligated to provide any support for the code, however, if you add your questions in the comments section, we may periodically address them.
